Writing Sound Into the Wind

How Score Technologies Affect Our Musicking

Keynote Address

I. Abstracting from Sound

When I speak English, I speak with an accent that is informed by my years of growing up in India, by decades of speaking German, and by 12 years in Montréal – where a particular Franco-Quebecois English accent surrounds me every day.

Yet all this richness of sound in my spoken delivery does not appear in the written transcript of my lecture. This is not a bug of writing; it is its most important purpose: writing is a method of abstracting meaningful discourse from the real sounds of a person’s speech acts. Writing is thus intentionally biased against the individuality of human speech sound production. Chinese civilisations have taken this abstraction to its most radical form by using logograms to represent ideas rather than sounds. Logograms not only abstract and homogenise different accents, as alphabetic scripts do; they can also be used to write entirely different languages with the same sign system. 1

A prominent cultural theorist of the Anthropocene, Nigel Clark, has pointed out that abstracting speech sound into writing is one of the many techniques and strategies that human societies employ to protect themselves from a volatile existence on an unstable planet, and from one of its side effects in human life: the uncomfortably uncertain and unforeseeable dangers inherent in social interaction (he mentions slavery as another such strategy). 2 Troubled by life’s existential vagaries, we want to at least imagine our existence as something stable, something writable, something readable – which might give us some respite from the incessant attacks of the furies of time:

Music for a while

Shall all your cares beguile.

Wond’ring how your pains were eas’d

And disdaining to be pleas’d

II. Ontologies of Musicking

The fact that I can conjure up, out of thin air, a piece of music that most of you will know and instantly recognize – this is, on many levels, a quite improbable semblance of stability – and thus a remarkable success against those furies. After all, sound is really nothing but a fleeting flutter of the air around us. As an artist, I am intrigued by the fact that wind and sound are both cousins (both move individual air molecules) – and enemies (concert halls primarily exist to kill wind). So much of music is about wind, and yet real winds would devour it in an instant…

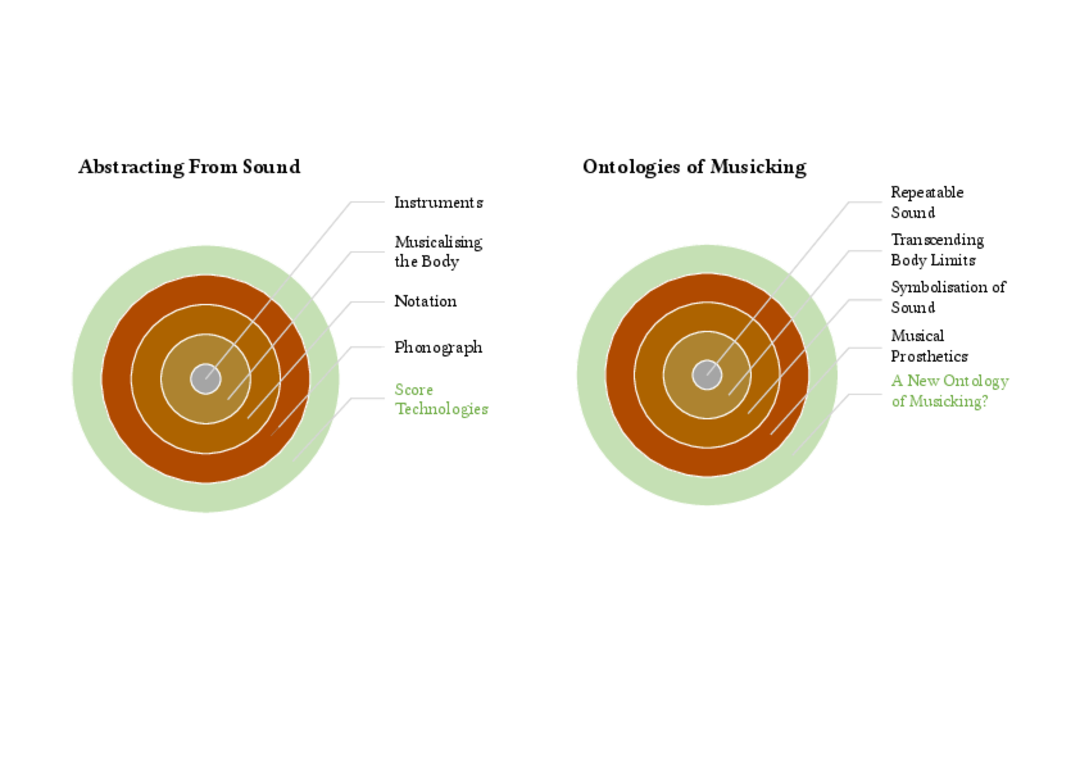

Our temporary victory against music’s ephemerality relies on a roster of techniques and technologies that have, step by step, changed the very ontology of musicking: when each became widespread in a culture, it redefined what people thought this thing called ‘music’ to be. These changes can be described as five mutually interdependent expansions of musicality, four of them historical and a fifth only now beginning (fig. 1).

Figure 1 The gradual de-materialisation of sound making (left) and the apparently concomitant expansion of ontologies (right) for music making. Will the introduction of responsive and fluid score technologies once more change the very nature of what we call music?

1. INSTRUMENTS – The first step away from the origins of musicking in the human voice was the construction of things that can reliably (re)produce a specific kind of sound, be it specific pitches or specific timbres or both: the things we call ‘musical instruments’. Instruments are an extraordinary achievement: while a human voice and its expressions emerge from and must return into the wider flow of life, instruments consciously work against this entropic river – they fish out individual sounds for inspection, introspection, analysis and reproduction – and thereby give us one of the sweetest victories over time: to be able to make the same sound again. The instrument made sonic matter more reliable and repeatable: once we could manipulate and order sounds, such a repeatable sound could play the role of a symbol.

2. MUSICALISING THE BODY – Instruments (like all tools) have the potential to make more sounds, and more reliably varied sounds, than their inventors could ever have imagined. In order to realise these affordances, the human body must become intensely entangled with the materiality of the instrument; in this process even the voice was redefined as an instrument. The second step to a new understanding of music came through the training that this entanglement requires, which is nothing other than an abstracted re-imagining of the body as a reliable tool for music making. Training the musician’s body to master an instrument or their own voice moreover made music transcend the range and the capacities of the human body alone: music became a prosthesis, something added to the human voice. Musical behaviours no longer arose only in a body; they became stored in the interaction between a body and something stable – bones, woods, stones, metals. In other words, musicking now physically connected us to the world around us.

3. NOTATION – In many cultures, notation came next. Its purpose is to build on the double abstraction of the sonic and of the body and to move sound making further away from the furies of disappearance – in this case from the unreliability of memory: notation could establish an abstract musical form beyond the limited reach and temporal existence of the human body, in a ‘platonic’ space of shared cultural memory and shared ideas. Notation opens the affordances of the instrument (and the voice-seen-as-an-instrument), the symbolisation of sound, into a realm of abstract symbol manipulation, outside of sound.

4. PHONOGRAPHY – The fourth paradigm shift was the invention of the phonograph 142 years ago: being able to store and reproduce soundwaves, first through direct inscription and later through data reconfiguration, has changed everything in musicking. We have the phonograph needle, and now: Death, where is thy sting? Phonography (and the technologies of sound generation and manipulation that stem from it) expands the prosthetic approach to sound production, our outsourcing of music away from the body: we now need no human body at all to make music appear – all we need is the right composition of minerals and metals – and music can manifest at any time.

Each of these technologies has added unforeseeable affordances to music making. Each of these new affordances also profoundly changed what humans thought music was about. All of them, as soon as they appeared, changed the very ontology of musicking.

While this text focuses on notation, we need to keep in mind that the act of notating and composing does not necessarily imply a creative act within the sonic – anything can be composed: composing in sound is just a special case of composing ‘things’. The essence of notating a composition into a score lies in the act of stabilising the unstable. A score is not about what is notated, it is not essentially about sound – it is about laying down in writing precise relationships between aesthetically relevant ephemeral objects. These objects may well be sounds, but can also, as in Stockhausen’s Inori, be movements or, as in Scriabin’s Prometheus, colours. But what happens when new technologies call into question this very notion of defining a particular set of relations between aesthetic objects?

In this text, I propose that new kinds of scores will once again change what we think music or musicking is – that new score technologies constitute a fifth expansion in the ontology of musicking: music, something we thought was a uniquely human expression, can now manifest itself as a technological, para-human entity that is deeply entangled with non-human agency. These non-human agencies enable us to imagine a score as something that is less fixed and more fluid than the history of Eurological music in particular has taught us to assume.

III. Notational Perspective

In order to understand what fluidity could mean in the context of a score, we need to first take a look at what notation does when it transforms sounds into writing.

No notation can write down the complete four-dimensional reality of a sound. Any symbol for sound must thus select those elements or parameters of a complex sound phenomenon that are aesthetically relevant – to the writer of the score. As most of you know, acoustic and psychoacoustic analysis can represent sounds in about 20 dimensions, using such parameters as the spectral centroid (a measure of sound brightness), formants, spectral synchronicity, and many more – including, of course, the two parameters preferred by traditional European notation: pitch and duration.

Music theory sometimes still includes other parameters, such as loudness, timbre and sonic texture – but these are actually quite complicated behaviours of sound, not parameters in the same way as pitch and duration: loudness depends on musical, spatial and perceptual context; timbre has a strong psychoacoustic and cultural component; and sonic texture really is a placeholder term for everything else about a sound. The many dimensions of sound that I mentioned before were essentially brought in to replace the vagueness of terms such as loudness, timbre and sonic texture.

The notation most readers of this text would know best (i.e., common Eurological notation) 3 recognises this double standard – it uses clearly defined notation objects for pitch and duration (musical notes) and employs intentionally vague graphics and verbal instructions to notate loudness and timbre (articulation). It skips the notation of sonic texture entirely, because sonic texture is a meta-parameter, an emergent behaviour resulting from many independent phenomena.

Once we are clear about the fact that common Eurological notation picks and chooses which sonic properties it can represent in what kind of writing, it becomes equally clear that the bias of this type of notation is a contingent result of choice – it became established as the most efficient way to represent locally and historically circumscribed ideas about what is important in music making.

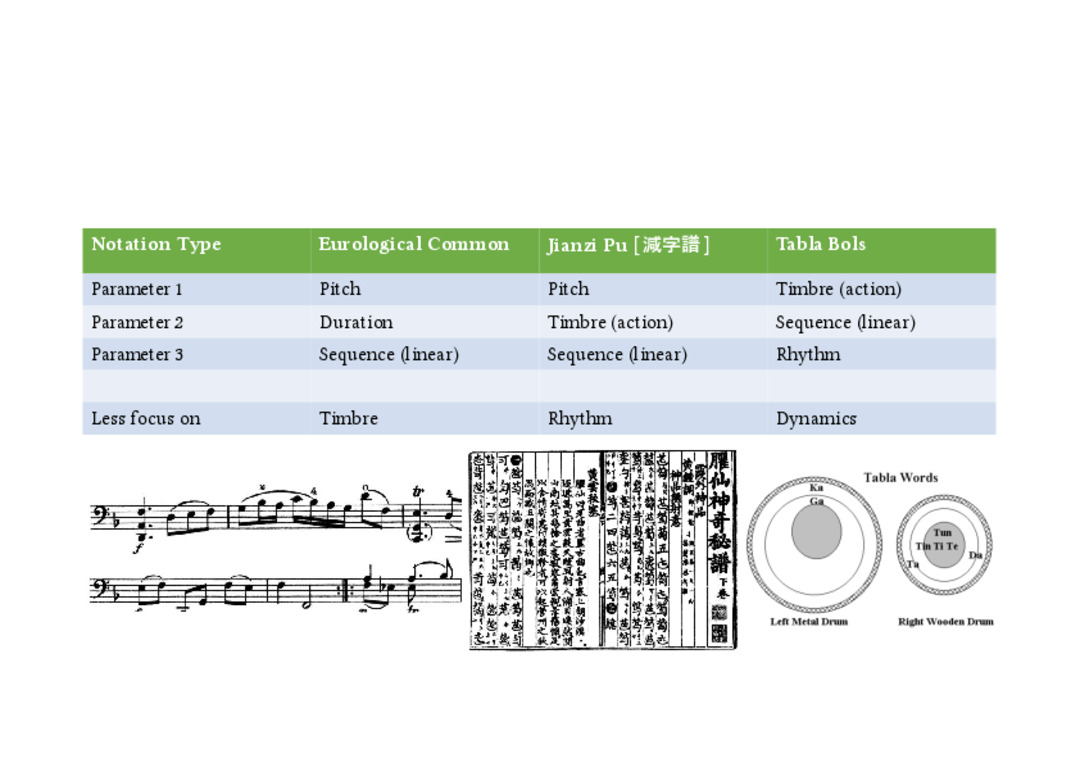

This means that if another musical tradition finds other parameters of sound more important, then their notation must be different in kind from common Eurological notation. I would just point to two notation systems that indeed function differently, but no less efficiently, to notate just those aspects of musical sound that are important to their users: the notation of Qin music in China and the Tabla Bol system in India (fig. 2).

We always talk about music as a time-based art. But Qin notation, for example, does not appear to be deeply and artistically interested in time’s flow at all. Decisions about duration and timing are left to the musicians in much the same way as decisions about instrumental timbre are left to the musicians in Eurological notation. Time is important to Qin musicking, but it is a concern of making, not of writing. On the other hand, Qin musicians obviously are very interested in timbre, for they notate the exact way to pluck a string. To Qin music notators, then, the sound of their music seems to be of more artistic relevance than how it moves through time – that, at least, is what their notation says.

Indian Tabla Bol notation, the notation for a rhythm instrument, must on the other hand by necessity be interested in time. In this notation, time is notated in cycles – time is conceptualised as variations on a repeatable time segment. In addition, Bol notation is deeply invested in timbre: the many possible ways of striking the drum with the bare hand and producing a specific drum sound are codified as complex notational objects. What a Bol notator is not interested in, however, and therefore cannot notate easily, are pitches (tablas are pitched instruments, but their pitches are not represented in the notation), non-cyclic rhythms, sounds produced by means other than the bare hand, etc. It should be mentioned, and will become important for my argument, that Tabla Bols are not normally used as a written notation – they are an oral notation and therefore also offer the potential of becoming a real-time notation: notation that co-exists in synchrony with the music. Notation does not need to be ink on paper.

Figure 2 A simplified comparison between three notation systems (common Eurological notation, Chinese Jianzi Pu notation and Indian Tabla Bol notation) shows their differing notational perspectives.

Such biases with respect to which parameters should be represented in notation and which should not can tell us much about a musical tradition and its ontology of musicking. Hermann Gottschewski has called them “the perspective of a notation”. 4 Such a perspective always makes it easy to notate those aspects of musicking that a particular tradition cares about, and makes it very difficult or even impossible to notate other aspects that the inventors of a notation did not have in mind: hitting a table with a drumstick, playing a Qin to an orchestra conductor’s indications, notating subtle timbral shifts in an oboe.

I would like to make it clear that the notion of notational perspectives is not just a philosophical notion; it actually can – and should – be an analytic tool that could underpin any theoretical discussion of notation. Elsewhere, 5 I have listed the possible parameters and conducted a first analysis of the notational perspective of Schubert’s piano scores. There is no time to go into such detail here; I would nevertheless like to present a checklist of analytic categories that could help define notational perspective:

- Notation Type: a superficial classification of the notation – is it primarily graphic with some symbols and some verbal instructions? Is it Eurological or Tibetan or Malaysian, etc.? Is it oral, written, or a collection of rules? Etc.

- Notation Objects: which kinds of notation objects (symbols that bundle several parameters) are used in the notation?

- Internal Relations: which type of notation is dominant, which one corollary, which one subservient?

- Functions: which parameters of music making are controlled by which type of notation?

- Degree of Freedom: which parameters are notatable, and which ones are left to the performer/listener to figure out?

- Impact on aesthetic experience: what does the notation want the listeners to focus on – i.e., which elements of the music are intended to convey aesthetic information?

- Impossibilities: which musical phenomena are impossible or very hard to notate? 6

IV. Comprovisation

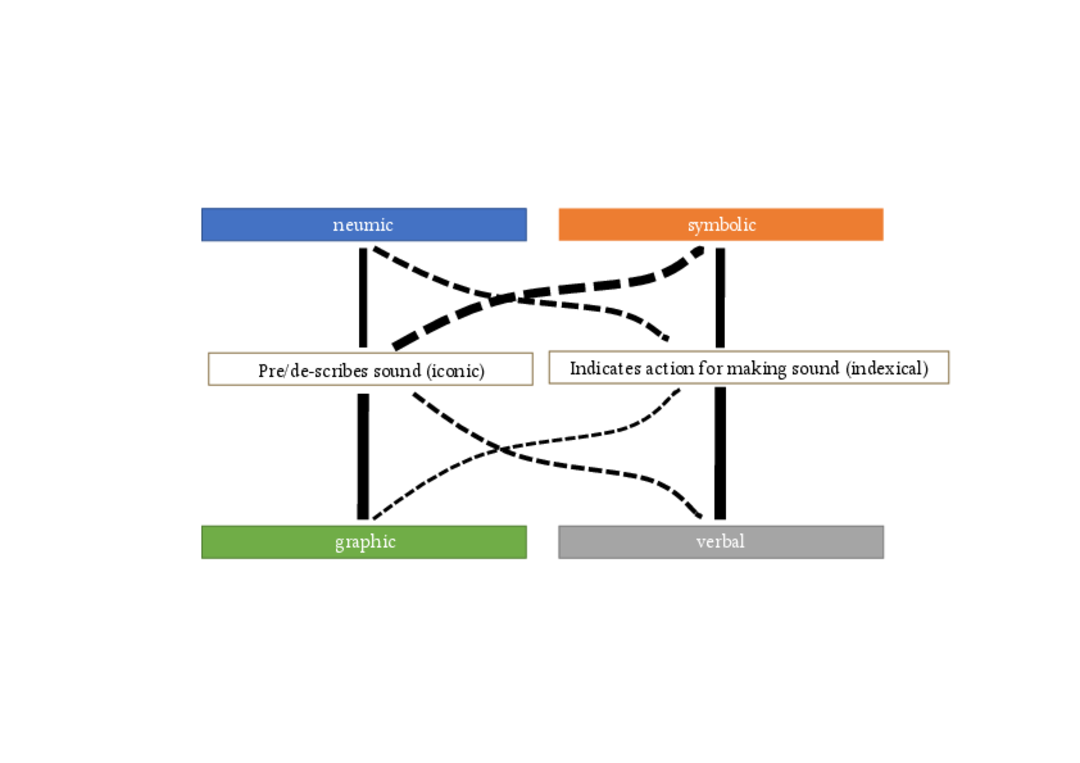

This list could lead one to assume that the intrinsically infinite range of possible notational perspectives would open up a similarly infinite field of notational techniques. That, however, does not seem to be the case. Rather, it appears that all written music notation can be read as a specific combination of four broad types: neumic, symbolic, graphic and verbal (fig. 3).

Neumes were the first steps towards notation: mnemonic signs attached to individual written words as aids to pronunciation, intonation or singing. They form a partly symbolic, partly iconic notation.

Symbolic notation developed (in Europe, at least) from neumes by inscribing them into the musical stave – a two-dimensional Cartesian space of pitch (vertical axis) and time (horizontal axis). Symbolic notation, however, is not really Cartesian, as the symbols used often are informational ‘objects’ that may come with their own temporal dimension (e.g. in the West) or may just denote a sequence without specific durations (e.g. in East Asia). 7

Graphic notation, then, is a fully notated Cartesian representation of the sonic space. It is particularly suited to representing non-discrete, continuous parameter changes (slides instead of scales) and is mostly used as an iconic notation.

Finally, verbal scores either are extensions of a symbolic score (usually for unique actions that require no ‘portable’ symbol), or literary descriptions of moods or musical gaits – or are used to describe complex interactions between musicians. They can be indexical or symbolic.

In addition to these four types, notations can be characterised by whether they intend to convey a resulting sound or the action required to arrive at a sound.

Although all four types can in principle convey both, symbolic and verbal notations are more suited to indicating the action required to activate a sound, while neumes and graphic notations are more apt to be used as ‘icons’ (likenesses) for a sound.

Figure 3 The four basic notation techniques: two are iconic (neume-like and graphic notations), two are indexical (symbolic and verbal notations). Many scores contain instances of several of these techniques, in various hierarchies and layerings – these constellations are often unique, and might well serve to fingerprint a style or a piece. Note that these techniques are not only applicable to visual scores – they will also appear in audio or haptic scores.

Of course, any combination of these four types of notation may be used in parallel, even within one notation system. In Western standard notation, symbolic and graphic elements often intermingle: the proportional rhythmic ‘look’ of a measure, for example, although irrelevant to the notation itself, can greatly aid musicians in reading, especially in polyphonic situations.

While iconic sound notations at first seem more intuitive, their very iconicity decisively limits the complexity and, more importantly, the possibilities for context-independence of the notated event: action notations are far more efficient in transferring precise musical ideas from one sound source to another, thus creating new sonorities from the same musical thought, and have consequently been highly successful in polyphonic, multi-instrumental and multi-traditional music.

Most contemporary scores employ a constantly shifting pragmatic mix of several of the basic varieties isolated above. Notation – that is, the choices composers make when they creatively use or invent a notational perspective in writing down their music – thus seems to be a question of imaginative craft and, as such, deeply linked to the creative process. Notational perspective could thus be an additional analytic parameter for music theory.

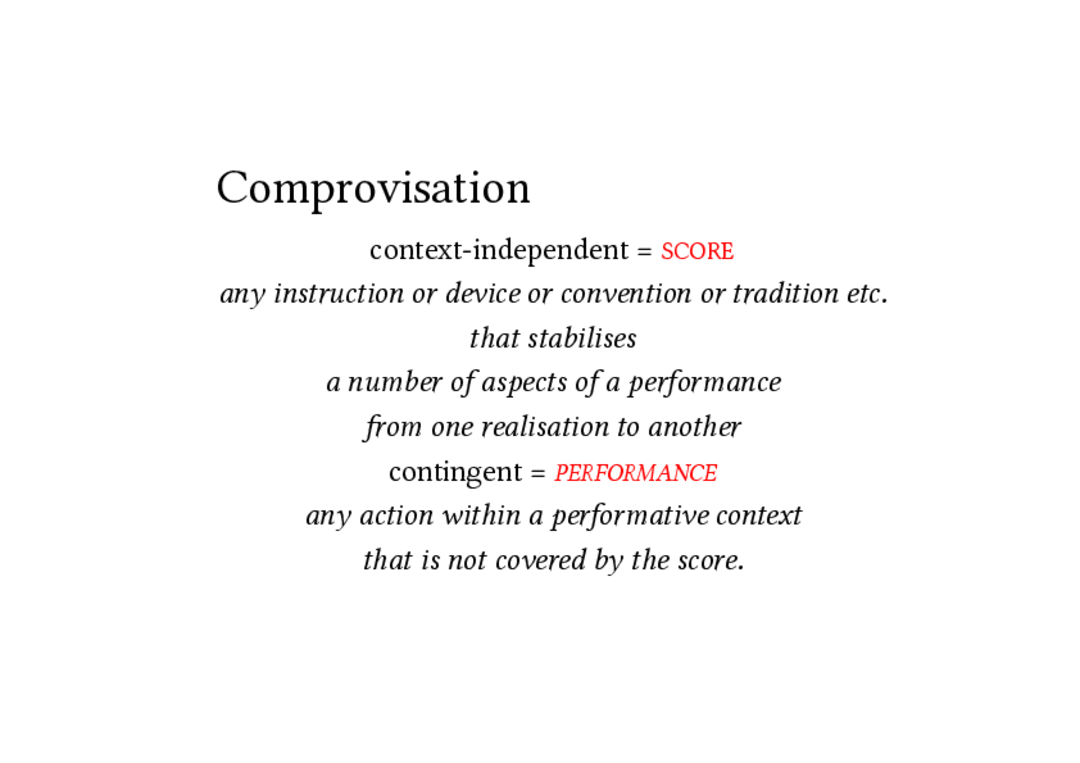

Notational perspective can be used as a globally applicable stylistic concept to describe the diverse aspects of music making that people find either easy or difficult to notate. As such, it may help to defuse the familiar musicological and categorical pseudo-feud between composition and improvisation, for it only differentiates between those aspects of a musical event that stem from score elements (and are thus part of a composition) and those that do not (and hence must be improvised in a contingent manner). Notational perspective thus subsumes both contentious terms in a wider perspective.

Twenty years ago, I adopted the portmanteau word comprovisation to designate “musical creation predicated on an aesthetically relevant interlocking of context-independent and contingent performance elements.” A key term in this definition is “aesthetically relevant” – it points to the necessity of conscious engagement by participants in a given musicking context with the repeatable/contingent dichotomy that pervades contemporary creative music practices.

Comprovisation thus is not one particular way of creative musicking; it is not in itself a technique or an analytical category. Thinking in terms of comprovisation is more a reminder to us that questions such as “Is this music composed or improvised, is it notated or not, does it have a score or not?” are not very helpful for understanding a specific musical practice. Rather, one could ask: “How many and which aspects of this music are context-independent (i.e. composed) and how many and which are contingent (i.e. improvised)? And how is the relation or the ratio between composition and improvisation structured?” (fig. 4).

Figure 4 The definition of the terms score and performance used in this text. Following these definitions, each real-world composition or improvisation will presuppose the use of both score and performance elements in a unique mixture or layering. No music is entirely composed or entirely improvised – hence the term comprovisation to encompass both practices.

Comprovisation, then, could work as an inclusive descriptor for a field of creative music making. And notational perspective would then be that analytical and descriptive tool that would help to identify and triage a vast diversity of comprovisation practices.

V. Secondary Orality and Technological Scores

Up to this point, we have been speaking about scores as if they were stable objects that fix music – and thus make the ephemeral constellations of sounds that we call music a little more stable, moving them into the realm of platonic ideas – mostly by means of the marvellous technology of ink sunk into paper. Increasingly, however, people no longer use ink and paper to interact with ideas. Walter Ong, in his book Orality and Literacy, 8 calls the decisive shift in human communication that is enabled by more recent technologies a “secondary orality”, i.e., an orality made possible by technology based on written instructions. We engage in secondary orality whenever we listen to voices and music on the radio, when we communicate by phone, when we compose sound on tape or at a digital audio workstation, when we talk to Alexa or Siri to get things done.

This new habit of secondary orality, this shift back towards ephemeral communication, is one of the defining aspects of computer technology. On our computers, writing becomes as ephemeral as singing – while singing can become as semi-permanent as writing. No wonder that the score, this incarnation of written music, has undergone significant revolutions over the past decades – and that these revolutions in scoring engender a fifth ontological expansion in musicking. It is my contention that we are currently living through a period of change where new types of scoring open up a new way of understanding what music is and how it exists.

Music sociologist Alfred Schütz has famously described the experience of growing older together at the same speed 9 as one of the great attractions of the concert format (and by extension of listening together to recorded music). All across the world, traditional and especially oral musicking always takes place within this social contract, while ink and paper scores, context-independent as they are, resolutely remain outside of it. These written scores rather embody the medieval concept of God’s time, where past and future are already fixed (composed by God) and we humans must wander through it, unaware of what will happen.

Such scores enact, like most writing, a linear concept of time. Linearity is the belief that the sequence in which we perceive things is significant and that we can discover/create meaning by making and/or examining the temporal order in which we perceive events. We all share this perceptual bias.

But in the age of secondary orality, technology enables us to develop scores that share the temporal flux of the concert in synchrony with the performers. I have called them situative scores: scores that deliver time- and context-sensitive information to musicians at the moment when it becomes relevant. Situative scores do not build on linear, pre-existing information structures. Information in situative scores is only available ephemerally, i.e., while it is being displayed or accessed in a particular context.

But such situative scores are not the only new score possibilities that have opened up with newer scoring technologies. The most important step in score technology may be the one where notation steps beyond the visual sense. Our eyes process information faster and more flexibly than our ears. This speed differential has been crucial for notation technology so far: a reader is faster than a listener, and a musician can thus absorb crucial information quickly, ‘offline’, and still let musical sound evolve at its eigen-time. Until very recently, the other senses – touch, body awareness and hearing 10 – could not match the speed of vision: they process information ‘online’, at roughly the same speed at which music itself moves, and thus do not afford the score reader the visual score’s advance knowledge of the future.

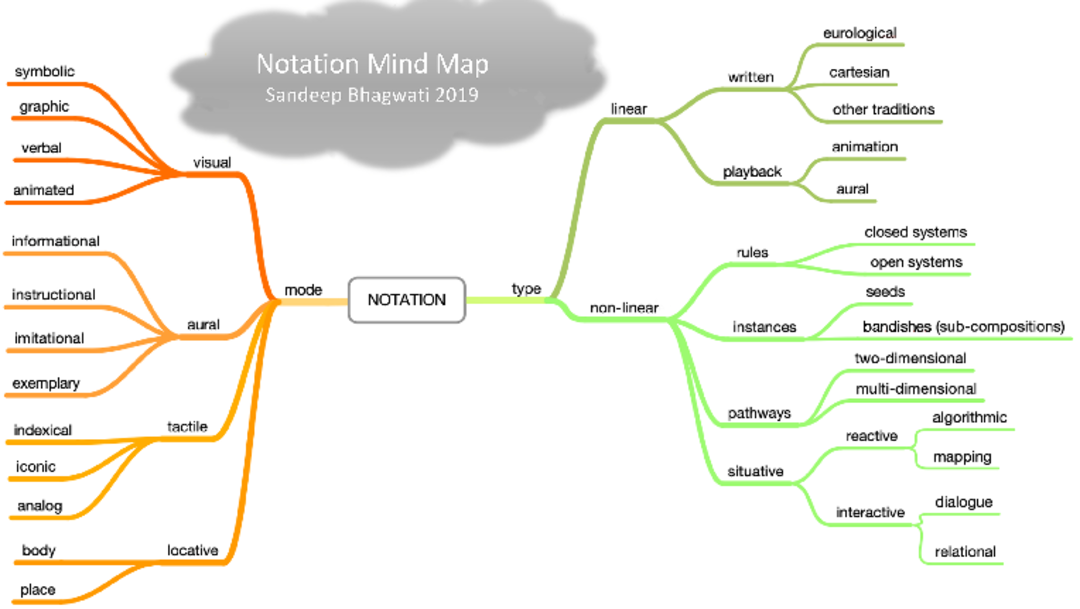

This is probably the reason why the default interface for situative scores still seems to be the visual score. Writing surfaces and/or display screens dominate the practice of scored music – but the confluence of technologies and techniques that I have just described, combined with comprovisation as a new way of understanding what happens when we make music and, not least, the technique that Jason Freeman has called “extreme sight reading” 11 when performing live-generated scores, has levelled this particular playing field. Now touch and hearing and proprioception could, in principle, indeed become notational interfaces, too. This insight opens up a vast field for notation, outlined in the mind map in figure 5: a tree of possibilities of which the notation most music theory engages with constitutes just one branch, the one leading from symbolic to visual to linear to written to Eurological.

Figure 5 This notation mind map conceptualises the many possible ways in which notation could be conceived. To the left are the sensory modes that could be used to communicate with the performers and to the right are types of notation, the concepts for notation that could be used. While the right side has been explored over the last 50 years, the left side has been studied much less. On the other hand, the left side seems to offer a complete overview, while the right side is almost certainly incomplete.

Unfolding this map would take too much time and space. Suffice it to say, it clearly shows that notation has only just begun to unfold its potential for new types of music making.

VI. Invisible Notations and Non-Human Agency in Musicking

You may have noticed that at the beginning of my talk, I called ‘phonography’, the writing of sound, a post-human sound technology, because it does not require any sonic human intervention to make some musical noise. Recent technological developments take such post-human music production to the next level: Just as sound making once had been ported into the body of instruments, and music reproduction had been transferred into the body of electrical devices, music creation itself is now being implanted into computer software.

In 2014, for a curious concert at McGill University in Montréal, 12 three live-composition systems were made to play with each other: George Lewis’s Voyager system, Michael Young’s Prosthesis system and the system developed in cooperation between my lab, matralab, and IRCAM: a software architecture we called Native Alien. For this concert, instead of being presented alongside each other, these three software systems were to be linked in a closed loop: the output of one would become the input of the next. By listening to this canon of AI composers, we hoped to discern their sonic and musical affinities and differences in compositional behaviour, their ‘personalities’, so to speak. As each of them reacted to the output of the other, we would be able to enjoy a composers’ battle in real time, something that up to then had been almost impossible to do.

At first, we toyed with the idea of linking them through data connections: but we quickly understood that this would tend to make musical developments too fast and complex for humans to follow. The three AI systems might be enjoying each other’s company, but we human listeners would be left behind. I joked that maybe we should get all the office computers from the building and put them into the audience seats – maybe they would understand (and enjoy) what was going on! In the end, we decided that these systems, which had all trained with human players, should instead send each other their audio streams, thereby slowing down to our human speed.

Each of these systems was fully able to produce a constant stream of interesting musical events in the language of non-idiomatic free improvisation. At least two of them could also have worked with more art music material, had the concert not been part of an iconoclastic popular music festival. But in recent years, AI music generation has made giant leaps towards everyday usage: commercial web platforms such as Amper, AIVA, Ecrett and others 13 can generate idiomatic music in many styles, can imitate emotional dramaturgies and, in general, produce convincing musical output that usually equals or sometimes even surpasses the level of most human commercial music composers. The music they produce can be emotionally and sonically touching, especially for moviegoers. Not only does the day seem near when a movie soundtrack will not be composed by human composers; it also doesn’t seem implausible that in a ‘Black Mirror-like’ near future a cell phone app could supply us with a continual, individually composed live soundtrack to our life in the musical language we prefer at the moment.

When generative music software can produce musical realities that, to most human listeners, offer a viable alternative to human music making, then the kind of music theory that likes to deduce the artistic intentions of a human music inventor from the written score will be beside the point. Such computer-generated musicking needs no teleology, because it skips all the symbol manipulations that in traditional human composing transform lived experience into the formal abstractions of a conventional score. And if music thus loses its symbolic dimension, music theory in turn must adapt not only its analytic tools, but its entire ontology. When, in such a scenario, listening to newly made music becomes possible without someone playing instruments and without someone thinking curatorially about what should be played, and when thus no one ever shares the same music, when no one ‘grows older together’ – then musical sound can no longer be considered a cultural intervention, and in the aesthetic sense it is no longer a form of art. For art, after all, is a shared cultural expression, not a private experience.

In such autonomously growing sonic produce, sound will become the ‘ephemeral paper’ on which music is written directly, without any detour or filtering through human conceptualisation. In such an absence of sonic symbols, which elements of this new kind of non-teleological musicking should future music theory engage with? Which sonic objects and non-sonic concepts in it can still give rise to and underpin a symbolic discourse that can turn a relentless stream of sounds into an aesthetic subject fit for theoretical analysis? What should music theory seek to analyse such music for?

This is the point where thinking about and exploring new forms of notation can become relevant, where new types of scores can become crucial to a new ontology of sound and music. We saw how, in the previous ontologies, sound and music making moved away from the singing body, into instruments, mental concepts, scores, and sound-reproducing devices. On this path, music has become reified, a thing, even a commodity. But with the advent of automatic composition, with the probable rise of post-human music production and consumption, with ubiquitous and continual access to live sound making, we discover that the human body is crucial to make musicking a viable artistic proposition for humans.

For musical notation, however it may manifest itself, always presupposes a relationship between several human bodies, a transmission of ideas without sound. The score, the notation, becomes the medium through which we communicate ideas and notions of the body – and not necessarily music. When music becomes a feature of the environment we live in, when it becomes an impenetrable jungle, scores tell us where to go, where to direct our ears, how to find our way.

Through them, we become aware of a purpose of musical activity that lies beyond the activities of making and listening to sound. Sound is important to music, just as important as a white surface is to writing: musickers use sound to write into it; they inscribe their artistic intentions onto the support of sound. Listeners read artistic intentions from the ‘pages’ of sound that they encounter. And what, then, is notation to the musickers of today?

To carry the simile further, if sound is their paper, notation is their ink. It is through notation, the properly chosen notation, la notation juste, that the artistic idea becomes tangible as a text. Notation has always, in a sense, been the act of inscribing sound onto reality, but the new autonomously evolving kinds of sonic reality have changed this dynamic: now notation inscribes ideas into the musical flow itself. Notations, in the new reality of musicking, become ways of understanding the relationships, the structures, the patterns inherent in the incessant fluttering of air molecules around our ears. Instead of following divine commandments of a composer’s mind, a notion I have always found unnecessarily obsequious, reading a score will become akin to reading a map and choosing our path through its maze. Writing scores will become, not entirely unlike using interactive maps, an exercise in navigation, where the meaning of the music emerges from the multiple pathways we imagine for it, not only from the fairly limited sonic experiences we encounter on the way.

Thus, from now on, we may indeed just write our music into the wind.

References

- Bhagwati, Sandeep (2013), “Notational Perspective and Comprovisation”, in: Sound & Score. Essays on Sound, Score and Notation, ed. by Paulo de Assis, William Brooks and Kathleen Coessens, Leuven: Leuven University Press, 165–177.

- Clark, Nigel (2020), “(Un)Earthing Civilization: Holocene Climate Crisis, City-State Origins and the Birth of Writing”, in: Humanities 9(1), 1. https://doi.org/10.3390/h9010001

- Freeman, Jason (2008), “Extreme Sight-Reading, Mediated Expression, and Audience Participation: Real-Time Music Notation in Live Performance”, Computer Music Journal (MIT Press) 32, no. 3, 25–41. https://doi.org/10.1162/comj.2008.32.3.25

- Gottschewski, Hermann (2005), “Musikalische Schriftsysteme und die Bedeutung ihrer ‘Perspektive’ für die Musikkultur – ein Vergleich europäischer und japanischer Quellen”, in: Schrift: Kulturtechnik zwischen Auge, Hand und Maschine, ed. by Gernot Grube, Werner Kogge and Sibylle Krämer, München: Fink, 253–279.

- Ong, Walter J. (1982), Orality and Literacy, London: Routledge.

- Purcell, Henry (1727), “Oedipus Z.583” (incidental music to: John Dryden, Nathaniel Lee: Oedipus: A Tragedy, Act III, 1. London).

- Schütz, Alfred (1951), “Making Music Together. A Study in Social Relationships”, in: Social Research Vol. 18, No. 1, 76–97, Johns Hopkins University Press. https://www.jstor.org/stable/40969255